Saturday, December 6, 2008

Geneva for Claims Based Security

T-SQL and MINUS operator

What I was amazed to know that T-SQL does not support MINUS operator. MINUS is one of the SET operations. There are definitely some workarounds that you can think of. But all of them asks you to change the natural way of getting the result set using some TECHNIQUES which I don't like.

What do you say about this? Do you want to support this or you have some alternate thoughts about this?

Windows Workflow Foundation (Windows WF) - Lesson 1

Workflow Types:

There are two types of workflow. They are as follows:

1. Sequential workflow

2. State machine based workflow

The difference between a sequential workflow and state machine based workflow is the same as the difference between a flowchart and graph.

Workflow Authoring Modes:

There are four authoring modes of workflow:

1. Markup only:

It is based on XAML which defines workflow structure logic and data flow.

2. Markup and Code:

XAML defines workflow and code beside defines extra logic. The code is written in a .net compliant language e.g. VB.net or C#.

3. Code Only:

The workflow is created by code in constructor.

4. Application generated:

Application creates activity tee and serializes into XAML or code (C# or VB.net)

Workflow developed through these authoring tools are compiled through a workflow compiler. The compiler for workflow is wfc.exe. The workflow compiler passes this on to C# or VB compiler, depending on the language used by developer, which compiles the code in the workflow. A .net assembly is generated with *.ctor extension which defines the workflow.

Inversion of Control (Dependency Injection)

1. IoC Type I (Interface Injection)

2. IoC Type II (Setter Injection)

3. IoC Type III (Constructor Injection)

Though it is difficult to remember these types but I recommend you to remember these as these are commonly used during conversation between software architecture designers. This is part of architect’s language.

In Inversion of control the library only does part job by specifying an abstract solution. For the rest of the solution, they have to rely on the code using that library. i.e. generic code has to call to application specific code. It is explained as Hollywood principle: “Don’t call us, we will call you”. So, in Inversion of control based frameworks, it is the reusable code which calls application specific client code.

Inversion of control is about calling user code from library code. Earlier we used to do this in the form of function pointers. In this case, library defines only the abstract implementation of task and more concrete implementation is provided by the user code. Other option to provide decoupling is the Service Locator option. The difference is the way they provide the implementation class to the user of the library. Service locator hides the implementation classes but at least you need to be able to see the locator. So instead of many couplings, you have this additional coupling with the locator.

Inversion of control is not a new concept but it has already been there. Event driven programming is one application of Inversion of Control. You can see that you define event handlers which are then called by the framework following the Hollywood principle. In Microsoft world, this concept is presented as dependency injection. In functional programming, monad is an example of IoC.

Friday, October 17, 2008

SSIS Multithreading (Parallel execution in SSIS)

There are a few properties of package (Just right click control flow designer and select Properties).

MaxConcurrentExecutables:

This specifies the maximum number of execution flows allowed in parallel at any point in the package. It specifies the maximum number of threads that a package can create for concurrent execution. Remember that we can not specify 0 (Zero) for this property because this would mean zero thread meaning no execution flow.

The default value is -1. This means 2 more than number of processors.

You might be wondering about scenarios where the number of parallel execution flows might be greater than the number of threads specified. In all such cases, the remaining threads would have to wait until the time any thread completes execution.

EngineThreads:

This property is used to incorporate parallelism into data flow tasks. It has a default value 10. Be careful in specify the value. This is because this property, along with MaxConcurrentExecutables, decides the number of parallel execution flows in a package simultaneously.

Additionally two properties of Dataflow tasks can be used to specify Temporary storage to any faster disks. These properties are BLOBTempStoragePath and BufferTemppStoragePath.

Tuesday, October 14, 2008

Online indexes in SQL Server

In order to allow modification and querying data during the index creation, SQL Server maintains versions of rows in a Version Store. You can check size of version store by using VERSION STORE SIZE counter in performance monitor.

Now you have two options for creating indexes. They are as follows:

1. Online

2. Offline

It must be remembered that online indexes are only available in Enterprise edition of SQL Server.

With offline indexes any DML operations is not allowed. This is done by acquiring EXCLUSIVE lock on the table or indexed view. So for the whole duration of creation of index, no DML operation (including select) is allowed on the object.

There are three phases of creation of an online index. They are as follows:

1. Preparation: In preparation phase, following tasks are carried out:

a. Acquiring of lock mainly S and IS lock on table.

b. Creation of metadata for index.

c. Recompiling DML plans by incrementing version number.

2. Build

3. Final

For creating an index there must be sufficient temporary space available in disk. This space is required for sorting and other intermediate tasks. Extra space is used from disk when SQL Server does not find necessary space in primary memory to hold the temporary data. When SQL Server is using the disk space, it may acquire the space from user database if SORT_IN_TEMPDB option is not set for index creation or modification. There are certain advantages for using tempdb for this purpose. With this option most contiguous space is allocated for index which makes queries faster on the index. Additionally it uses tempdb transaction log.

When creating an index it is also to be made sure that transaction log has sufficient disk space as indexing creating large data loads which might fill the transaction log. It is best if you change the recovery model to SIMPLE or BULK_LOGGED before starting the index operation.

If an index is fragmented then it is either reorganized or rebuilt with ALTER INDEX REORGANIZE or ALTER INDEX REBUILD command. It must be remembered that reorganizing an index is always an online operation but rebuilding an index can be online or offline. For rebuild an index is dropped and a new index is created.

Index creation can also be further controlled with providing hints for maximum degree of parallelism in the CREATE or ALTER index statement. But keep in mind that as the degree of parallelism increases the requirement of memory also increases. So make sure that you have enough memory available.

CREATE INDEX IX_MyIndex

ON MyDB.MyTable (MyID)

WITH (MAXDOP=8);

Note: MAXDOP option can not be specified in ALTER INDEX REORGANIZE statement. Currently, it is always a single threaded operation. You can also specify MAXDOP in sp_configure command. This would apply the parallelism setting on all operations of the server instance.

Saturday, October 4, 2008

SQL Server Browser Service to add connection in Visual Studio

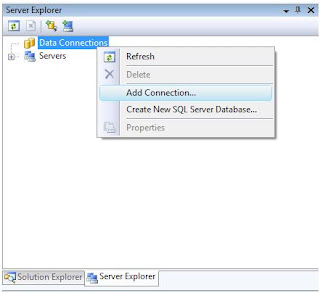

Add a connection in Server Explorer:

Add connection in Server Explorer:

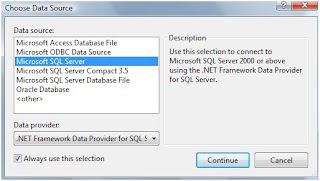

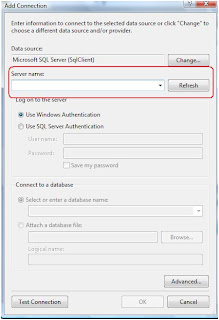

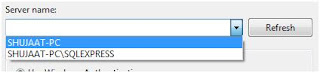

Select SQL Server from the selection list. When you try to select the servers then no server is available. Now you may think that you have SQL Server installed then why not those servers are available for selection.

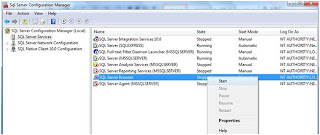

Just start the SQL Server Browser Service. This should solve the problem.

Now you may see that the servers become available in the selection list in Visual Studio.

Friday, October 3, 2008

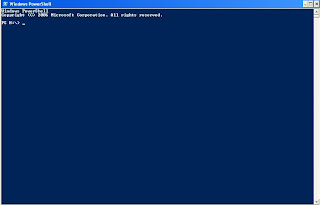

Windows Power Shell Basics

It must be remembered that Windows Power Shell has been built on .net. So you can deal with objects i.e. it can process and return objects as well compared to earlier tools which could only return text.

Windows Power Shell introduced the concept of cmdlet. These are small program designed to perform simple task. They can be combined to perform complex tasks. The tasks may be accessing directories and files, registries, digital certificates etc.

The good thing is that you can use the existing tools like Net and Reg.exe being in the environment of Power Shell. Various Cmd.exe commands are supported inside Windows Power Shell Environment.

The names of cmdlet(s) are very easy to remember. It is always Verb followed by hyphen and then a noun afterwards. The examples are ‘Get-Help’.

We can get help about any cmdlet by using Get-Help. The format is as follows:

Get-Help get-help

One thing to remember is that Windows Power Shell is not case-sensitive.

Monday, September 29, 2008

SSIS Package Deployment Utility

1. File System

2. SQL Server job

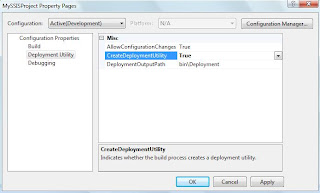

Just take properties of your project and go to Deployment Utility tab.

Set the "Create Deployment Utility" as "True" and specify the "Deployment Path".

As soon as you build your project deployment utility is created in the above specified folder with the package file. The file type of Deployment Utility is "Integration Services Deployment Manifest". The extension of the deployment package is "*.SSISDeploymentManifest".

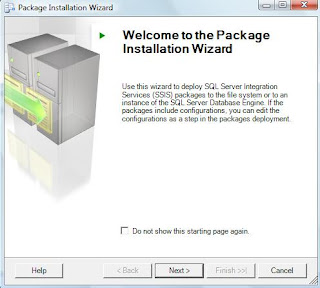

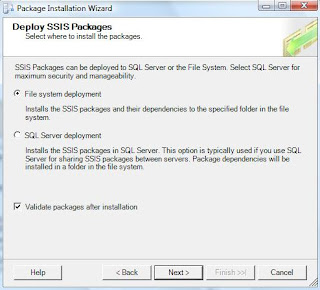

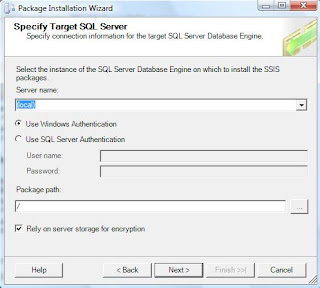

When you run this manifest file. The package deployment wizard is started which helps in deploying the package.

As discussed above, you can also specify the deployment destination for our SSIS package.

If you choose to install in the file system then you just have to specify the destination folder and start the wizard. If you choose otherwise and install in the SQL Server instance, then you have to specify the SQL Server instance in which we want to install this package.

Wednesday, September 24, 2008

SSIS Unit Testing Framework

I have always been displeased being not able to unit test my packages

created in SSIS. This was because there was no way to do this.

But the days of darkness are gone. SQL Server Integration Services

Community has felt this need of the day and has come up with a Unit Testing

Framework. Now guyz! if you are not from development background even then

you don't need to worry because no .net code has to be written to write

your unit tests. All you have to create is an XML files with your commands.

So you don't need to know any .net code. Still I have not found out if I

want to write some .net code then how can I do that.

The thing I liked most is that this Unit Test framework is based on xUnit.

It means it follows the same setUP, test. and tearDown flow. There is also

support for Test Suites. The developers of Microsoft Dynamics AX should be

happy to listen to this news.

To keep ease in consideratino, a GUI has been added to create Unit Tests.

I am copying a sample XML used for this framework from codeplex.

You can download it from the following codeplex.com link:

SSIS Unit Testing Framework

You can see that it is just version 1.0 release in Aug, 2008. So

don't expect it to be all bug free.

You would be glad to know that the utility is available as .

So I can't wait to put my hands on this. Share your experience with me

about this.

Enjoy!

Saturday, September 13, 2008

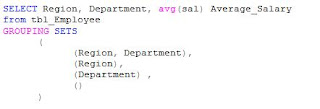

Grouping Set (SQL Server 2008)

Though the same result could be achieved earlier but we have to write different queries and would have to combine them using UNION operator. The result set returned by GROUPING SET is the union of the aggregates based on the columns specified in each set in the Grouping set.

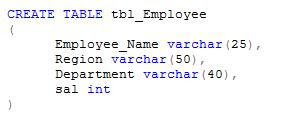

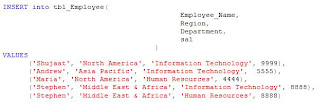

To understand it completely first we create a table tbl_Employee.

Now we populate table with some the following rows:

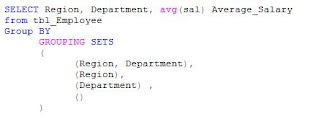

After populating with the rows, we select some rows using Grouping Sets.

The result of this statement is as follows:

You can see that the result set contains rows grouping by each set in the specified Grouping Sets. You can see the average salary of employees for each region and department. You can also appreciate the average salary of employees for the organization (NULL for both Region and Department). This was the result of empty Grouping Set i.e. ().

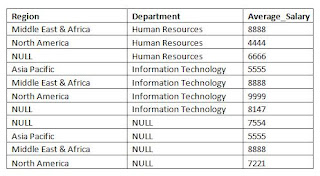

Before 2008, if you had to get the same result set, following query had to be written:

By looking at the above query, you can appreciate the ease provided by Grouping Set to the developer.

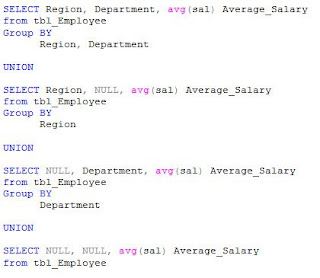

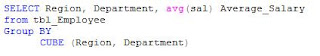

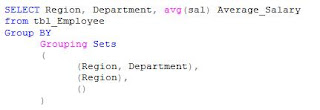

CUBE Sub Clause for Grouping

This is used to return power ‘n’ to 2 for ‘n’ elements.

The above query is equivalent to the following query:

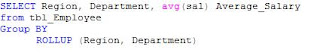

ROLLUP operator for Grouping

This is used to return ‘n+1’ grouping sets for ‘n’ elements in the hierarchy scenario.

This is equivalent to the following query:

Thursday, September 11, 2008

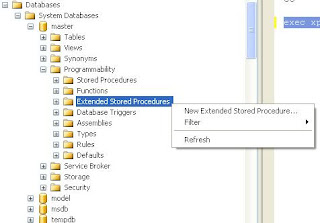

Extended Stored Procedures (From C, C++ DLL)

1. Standards Stored Procedures

2. CLR Stored Procedures

3. Extended Stored Procedures

Today we are going to discuss about Extended Stored Procedures. If you are new to SQL Server world (just a few years old in SQL Server environment) then it might be the first time when you have ever heard of this name. So let’s take a look what they are:

There are sometimes requirement to perform operations which are very difficult or impossible to do in SQL e.g. WIN32 operations like killing a system process, writing to the event log, encrypting data to a database, parsing XML, shelling to the command-line. Back in earlier days, we needed something to support such operations. For these types of operations Extended Stored Procedures were introduced in SQL Server earlier. Now you would say: what is its requirement now, when you can write these operations in managed code using CLR Stored Procedures? But just wait a moment and believe that you are living in a world of SQL Server 7. There is no .net framework yet developed. Now answer how would you do this? Your answer must be using C++ DLLs. Yes! This is the same that Extended Procedures were introduced for.

In SQL Server 2005, even with introduction of CLR Stored Procedures, extended stored procedures remained part of the DBMS. But because of this there always existed a backdoor for hackers into your server because these stored procedures were basically unmanaged code and had known issue of Buffer Overflow.

It is good to know that these stored procedures will no more be part of a new version of SQL Server. All of you, who are planning to move to next version of SQL Server after 2008 version, make this part of plan to rewrite all your extended stored procedures in a CLR compliant language. At least what we can do is that we don’t develop any new logic using extended stored procedures and keep porting our earlier extended stored procedures to CLR.

Enjoy!

Monday, September 8, 2008

Microsoft Project Zermatt (Claims based security in .net)

people's life or it is about their identity. For life saving, we have law

enforcement agencies. For security of identities, different mechanisms do

exist. Microsoft Code Name Zermatt is a step towards the same direction.

In many different applications and APIs there are different ways to build

identity support. Even with .net identity can be seen in different places.

When we have security so widely spread, it is difficult to maintain it. And

that is where hackers and phishers work hard to find a way to get some

place into your application.

Project Zermatt is about developing CLAIMS AWARE applications in .net

environment. It is designed to be simple to understand and open to use.

According to Microsoft, You don't need to be a security expert to implement

security based on Zermatt. A developer should be able to implement its

design based on Zermatt.

Microsoft is talking about identity providers everywhere. In SQL Server it

is doing that with addition of EKM i.e. Enhanced Key Management. Like

there, In Zermatt it has option to add custom identity providers.They are

also referred to as STS i.e. Security Token Services. It provides security

by providing a new CLAIMS MODEL including API support to build custom STS.

These STS implement WS-TRUST protocol.

From the very start of this Project, Micrsoft wanted it to support both B2C

(Business to Consumer) and B2B (Business to Business) Model. For B2C, it

should support ASP.net based application and for B2C, we mean supporting

WCF based applications.

For building Zermatt based applications or services you need to have .net

framework 3.5.

With Visual Guard, People came to the idea of centralized security. But

still at the time of mergers and acquisitions, it is very difficult to

maintain that idea of centralized security and developers had to look at

two different places to verify the identity of a user. By using Zermatt

across the industry, people will no more have this problem. So Zermatt is

towards a step for providing EXTRA FEDERATION for security.

To understand Zermatt first we need to understand what is CLAIMS BASED

security model? This is becaue it is the essence of Zermatt. for claims

based identity mode, user comes with all the information related to his

identity. Just by looking at this identity information user is

authenticated. Remember that this list of claims is also called SECURITY

TOKEN. They are generally signed by issuing authority to provide legitimacy

to the user.

For claims based security model a user needs a list of claims in order to

be authenticated by application (The application doesn't need to receive

username and password any more). The identity of a person is identified

through these claims. These CLAIMS are provided by some AUTHORITY which

have been assigned this role. So based on this discussion, we have

identified three roles.

1. User

2. Claim provision authority

3- Application

These three roles are named as CLIENT, ISSUING AUTHORITY and RELYING PARTY

(also called CLAIMS AWARE or CLAIMS BASED application) respectively in the

context of Project Zermatt. The issuing authority uses SECURITY TOKEN

SERVICE (STS) to provide tokens to clients. You can use built-in or custom

STS. There is complete framework support provided with Zermatt to create

your custom STS. The clients may be smart (active) or Browser based

(passive) clients.

Microsoft has used several WS-* standards to implement Zermatt for smart

clients. They are as follows:

WS-METADATAEXCHANGE:

This is used to retrieve policy by the Relying Party.

WS-POLICY

This is the standard for defining the structure of the policy.

WS-TRUST

This describes the working between smart client and Issuing Authority. This

deals with requesting and issuing security token.

For browser based applications these WS-* based standards are replaced by

HTTP GET, POST and REDIRECT.

In order to understand the tokens issued by the Issuing Authority, the

token must be in a standard format. This has been made easier by adopting

SAML (Security Assertion Markup Language) for security tokens by most

STS(s). SAML is a standard XML standard to represent claims. With this XML

based tokens platform independence for security can be achieved far easier.

This platform independence can appear even bigger if you include the

feature of Single Sign On with it.

Saturday, September 6, 2008

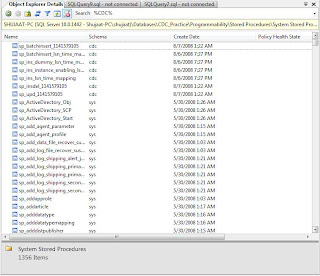

Object Search (SQL Server 2008)

SQL Server 2008 seems to answer our prayers by providing 'Object Search'. The feature is available as part of 'Object Explorer Details'. This window can be used independently and also with 'Object Explorer' window. This provides primitive search of database objects in SQL Server 2008. By 'primitive', I mean it has several limitation which would be felt by professionals using this tool.

To search any object, just type in the search bar in 'Object Explorer Details' window. There is wild card character available. This is same i.e. '%', which means zero or more characters. This character has the same behavior as T-SQL.

Object Explorer Details window can run in isolation or with the Object Explorer window.

Limitations:

1. It is not possible to search a particular type of object e.g. you can not search all stored procedures starting from 'CDC_'.

2. It is not possible to search negation e.g. we can not search all the objects which does not contain 'CDC_'.

3. Software professionals are generally comfortable with regular expressions for search. Regular Expressions are not supported for search.

Friday, September 5, 2008

Resource Governor (SQL Server 2008)

Click: SQL Server 2008 Resource Governor

Thursday, September 4, 2008

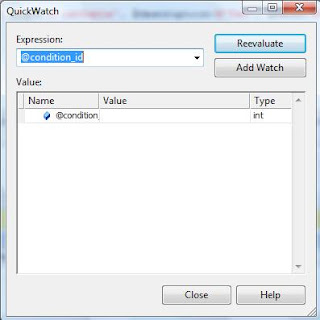

T-SQL Debugger (SQL Server 2008)

Like other debugging tools, we can add break-points in our T-SQL code. Pressing F9 would add the break-point as generally is the standard in other Microsoft tools.

There are different windows available for debugging the code. The windows include:

1. 'Local'

2. 'Call Stack'

3. 'Output'

4. 'Command Window'

5. 'Threads'

6. 'BreakPoints'

7. 'Watch'

You can 'Step Into', 'Step Out' or 'Step Over' the code. There are same short-cut keys available as in other Microsoft tools.

The 'Quick Watch' feature is also available. With this feature, you can evaluate any expression evaluated under the environment of execution of current code.

You can also toggle, delete or disable breakpoints.

Enjoy this feature!

SQL Server Agent Proxy

Click: SQL Server Agent Proxy

Sunday, August 24, 2008

SQL Server Alerts

Click: SQL Server Alert

Saturday, August 23, 2008

Google Custom Search (SQL Server)

Now this information on tip is very good as you can search the world very easily. But to find out the relevant information among the result is very difficult. We need a way so that we could search only the specific sites specializing in the specific field. Google introduced a Custom Search option few years back. Using the same provision, I have created a Custom Search for Microsoft SQL Server.

Google Custom Search

Please visit the above search engine to query your specific terms.

OUTPUT Clause (Composable DML) in SQL Server 2008

There are few updates using the OUTPUT clause in SQL Server 2008. But before that for those of you who are not acquainted with this OUTPUT clause, I want to discuss a little about this clause.

CREATE TABLE CUSTOMER_TBL

(

CustomerID int PRIMARY KEY IDENTITY,

CustomerName varchar(500)

)

Declare @Mytable table (Name varchar(50))

INSERT INTO CUSTOMER_TBL (CustomerName)

OUTPUT inserted.CustomerName as Naam into @Mytable

VALUES ('New Customer')

SELECT * FROM @Mytable

SELECT Name FROM @Mytable GROUP BY Name

With SQL Server 2008's row constructor, this statement can be used as follows:

Declare @Mytable table (Name varchar(50))

INSERT INTO CUSTOMER_TBL (CustomerName)

OUTPUT inserted.CustomerName as Naam into @Mytable

VALUES

('New Customer'),

('Another New Customer'),

('Third Customer'),

('New Customer')

SELECT * FROM @Mytable

SELECT Name FROM @Mytable GROUP BY Name

With 2008, this OUTPUT clause can do miracles because of composable DML feature provided. Let us define a new table :

CREATE TABLE CUSTOMER_ORDERS

(

CustomerID int PRIMARY KEY IDENTITY,

OrderDetail varchar(200),

CustomerName varchar(500)

)

INSERT INTO CUSTOMER_ORDERS(CustomerName)

Select e.CustName from

(

INSERT INTO CUSTOMER_TBL (CustomerName)

OUTPUT inserted.CustomerName as CustName

VALUES('NewCustomer'),

('Another New Customer'),

('Third Customer'),

('New Customer')

)e

In the above statement, all the new Customer Names inserted as part of the inner INSERT statement are used in the above INSERT statement. Any of these statements may be INSERT, UPDATE or DELETE. If you start the SQL Server Profiler trace then you would certainly admire that it is still an atomic operation.

MERGE Statement SQL Server 2008

For the sake of simplicity, we work out an example. Let us have two tables with the following definitions.

CREATE TABLE CUSTOMER_TBL

(

CustomerID int PRIMARY KEY IDENTITY,

CustomerName varchar(500)

)

CREATE TABLE CUSTOMER_ORDERS

(

CustomerID int PRIMARY KEY IDENTITY,

OrderDetail varchar(200),

CustomerName varchar(500)

)

The greatest example of MERGE statement is a scenario in which there are two tables; One is a source table and the other is the target table. All the rows in the source table which has a record with matching keys in child tables, the information in the source tables are needed to be updated.

MERGE INTO CustomerOrders c

USING CustomerTable t on c.CustomerID = t.CustomerID

WHEN matched then update

set c.CustomerName = t.CustomerName

WHEN not matched then

INSERT (CustomerName) values (t.CustomerName)

WHEN source not matched then delete

The greatest thing is that all of these INSERT, UPDATE and DELETE operations are atomic in totality as a single MERGE statement being executed.

Friday, August 22, 2008

Change Data Capture (SQL Server 2008)

Performance Improvement of SQL Server

Today I want to discuss many things for you to improve the performance of your SQL Server based systems.

Hardware:

Use Faster Storage Technologies:

It is true that by writing efficient code, we can improve the performance. But this code has to run on hardware, so by using the efficient hardware, we can improve the performance of our systems. These days, we are using multi core processors and primary memory up to many giga bits per second. But the problem is that our database systems are based on the data stored in our persistent storage drives. The maximum speed of such devices is 7200 rpm. This proves to be a bottleneck. But being a software person, we are not concerned, generally, with the speed of our hard drives. But by just using a storage which becomes more responsive to the data requirements of our system, we can improve our systems many folds.

Having discussed about this, a question pops up in our minds. Can we increase the speed of our hard drives? The answer is YES. This can be done by using the drives which have higher speeds. Gone are the days in which the organizations had to use the magnetic storage drives because of their capacity, cost and reliability. These days’ organizations are moving towards solid state options for persistent storage. This storage have more random access ability. So they can process data much faster then their magnetic counterparts.

The only problem is that, these drives are not marketed as they deserve to be. But being an enthusiast, we should dig the technology to get the best out of it.

Software:

a. Efficient use of indexes

First thing first, Index does not mean improving performance for every operation. If there are more SELECT operations in a table, use index on columns. But if there are more inserts / updates / deletes, don't consider implementing it. This is because this would further slow down the process. These operations would not only be slow but would also create fragmentation problems.

b.Computed Columns:

This is another decision you have to take based on your scenarios. If you have more Insert / Updates then using computed columns would significantly reduce the performance of your system. Instead if your system involves more SELECT operations then use of computed columns would prove to be a step towards drastic performance improvement for your system.

c. Selecting the data types of columns in a table

This aspect is sometimes ignored whenever an improvement is discussed. Always consider the following points whenever you have to select a data type of one of your column.

• You should have very good reason not to use non-Unicode VARCHAR if you have to deal with character data. The reasons may be your requirement to store data more than 8000 bytes. There may be special Unicode characters which you have to use. Otherwise, you can do very fine with this data type.

• Always remember that integer based columns are much faster to sort any result set if used in an ORDER BY clause.

• Never use Text or BigInt data types unless you need extra storage.

• Using Integer based data type for Primary key would improve the performance compared to text based or floating point based ones.

d. Bulk Insert / Updates

Whenever Bulk Insert or updates are involved, selecting the recovery model to FULL or BULK LOGGED would slow down the process of the insert or update operation. So what you can do is that you change the recover model do your operation and then change the model back to the previous one.

Index could also create delay for these operations.

Always try to pass data as XML to your stored procedures and use OPENXML for BULK INSERT or UPDATE. This operation is then done through a single INSERT / UPDATE statement as a SET based operation. Avoid using DataAdapter's for update even if you are using updateBatchSize with it. This is because of the reasons: it is not SET based; still more than one UPDATE statements are passed to the SQL Server. This only decreases the roundtrip between your application and SQL Server. The numbers of UPDATE statements being executed on the server are the same. The maximum value for UPDATEBATCHSIZE is a limited value. But with XML option you can send the complete data of a DataTable.

e. Parameterized queries

You might have studied that compiled queries run faster. This is because the DBMS does not have to create a plan for the query for each operation. This also would help you as a precaution against SQL Injection attacks.

f. Stored procedures

Stored procedures also help in preventing the SQL Injection attacks. They also decrease the roundtrips between application and Database Server because many statements are combined in one procedure. This makes these bunch of statements as a single transaction. There are also many other benefits. But the specified ones are enough to use them as a first priority.

g. Stop lavishing use of explicit Cursors

This has been said by so many people so many times that this repetition is certainly not very necessary. I just tell you my example. Few years back I was given a stored procedure in which the previous developer had the lavishing use of the explicitly cursors. The execution time has risen to 40 minutes then because of increase of data in the database. This was expected to increase more as the data increases. By just replacing these cursors with some SET based operations; I was able to turn that execution time to 3 seconds. This was a great achievement on my part. By just looking at this example, you can find out that use of cursor is killing for the performance of your system.

Certified Scrum Master (Shujaat)

http://www.scrumalliance.org/profiles/37693-muhammad-shujaat-siddiqi

Wednesday, August 20, 2008

IN Clause (SQL Server)

SELECT * from

tblcustomer

WHERE

(ID, Name) in (

select CustomerID, CustomerName from tblCustomerOrders

)

In the above statement, we wanted to list the details of customers who have submitted some orders. When you run this statement in SQL Server (with the tables tblCustomer and tblCustomerOrders already created), an error is generated about this. The real reason is that SQL Server does not support more than one parameters in the IN clause.

Monday, August 18, 2008

Compound Assignment Operators (SQL Server 2008)

DECLARE @MyIntVar INT = 4

SET @myINTVar += 2

SELECT @myINTVar

Inline Initialization of Variables in SQL Server 2008

E.g.

DECLARE @myINTVar INT = 3

DECLARE @myVARCHARVar VARCHAR = LEFT('Shujaat',1)

SELECT @myINTVar, @myVARCHARVar

Table Valued Constructors using VALUES clause

Before 2008, when more than one rows were to be inserted using INSERT statement, then more than one INSERT statements were required. But with SQL Server 2008, it is not necessary because only one INSERT statement would do the work. Consider a table with three columns ID, Name and Address.

CREATE TABLE TblCustomer

(

ID int PRIMARY KEY IDENTITY,

Name VARCHAR(30),

Address varchar(45)

)

The single INSERT statement is as under:

INSERT INTO TblCustomer(Name, [Address] )

VALUES ('Shujaat', 'New Jersey'),

('Siddiqi', 'California'),

('Shahbaz', 'Los Angeles')

This can also be used for bulk insert requirements because of its atomic nature. After creating this statement in you .net code, you may pass this statement to the database. This INSERT statement is an atomic operation so there aren’t three statements being executed but only single statements in executed in the database.

This constructor may also be used for SELECT and MERGE statements. The example is as follows:

SELECT VendorID, VendorName

FROM

(

VALUES(1, 'Shujaat'),

(2, 'Siddiqi')

) AS Vendor(VendorID, VendorName)

Saturday, August 16, 2008

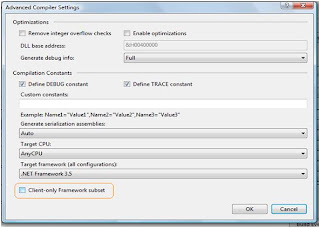

.net framework 3.5 client profile

The components includes in the Client profile are as follows:

1. Common Language Runtime

2. ClickOnce

3. Windows Forms

4. Windows Presentation Foundation

5. Windows Communication Foundation

Now you must be wondering if I have this profile installed then would I be able to install complete framework if at some later time I would want to upgrade the machine. Then the answer is YES.

But how should I prepare my project so as to target the Client Profile. Can I target to Client Profile as I target 2.0, 3.0 or 3.5 in my project settings? The answer is ‘Yes’. For a windows application take properties of your project. Go to ‘Compile’ tab and Click ‘Advanced Compile Option’ button. The following dialog box appears:

Now what would happen if I use any assembly which is not part of the profile? This would not be taken as ‘Error’ but this comes as ‘Warning’.

On the Client machine, you would have to install Client profile. The download is available on Microsoft download center. It must be remembered that Client profile based applications can be installed with Windows Installer or ClickOnce.

Thursday, August 14, 2008

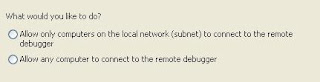

Visual Studio 2008 Remote Debugger

Since it talks about remote stuff so there must be some host computer running the debugger and there must be a remote computer running the application. The Debugging monitor (msvmon.exe) is installed on the remote computer. This remote process is then attached to the host computer through Visual Studio 2008 for debugging.

Host computer: Running debugger (Visual Studio 2008)

Remote Computer: Running application to debug and debug monitor (msvmon.exe)

There are two tool installed when Visual Studio 2008 Remote debugger is installed. They are as follows:

1. Visual Studio 2008 Remote Debugger Configuration Wizard

2. Visual Studio 2008 Remote Debugger

The remote debugger is configured through Visual Studio 2008 Remote Debugger Configuration Wizard. This is a separate utility. This tool allows us to specify how remote debugger should be run. Specifically all these questions are answered using this tool.

There are two options to run Remote Debugger.

1. as a service

2. as Windows application

Generally, the first option is used for server applications like ASP.net. Using this option, the one doesn't have to login on the server. We have to specify the username and password whose privileges this service would be using. Since the service is always running, it is not recommended for client applications as it will be always running if this option is used.

Remote debugger also requires some ports to be unblocked. The ports are as follows:

1. TCP port 135 (Used for DCOM)

2. UDP port 450 or 500)

The configuration should allow the remote debugger that it could receive relevant information from the network. There are two options:

The second option would allow the application debugging from outside the local network. This is an excellent option if we want to debug our application running on client side right from our office thousands of miles apart. This is very good option for organizations involved in off-shore development.

Surely we don’t want anyone else to start debugging our application. There are two options for this:

1. We can specify whether this feature is only available to all the users belonging to our local network or debugger may come through a public network. This is configured using Remote Debugger Configuration utility).

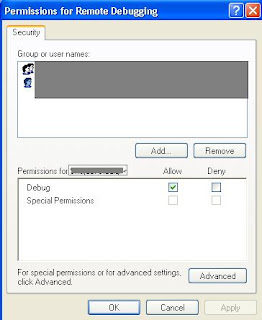

2. We can also specify which users / groups have permissions to run the debugger. As shown in the figure:

So this security arrangement is very refined. We can not define rights based on the applications i.e. we can not specify that it can debug Application1 and Application2 but he can not debug Application3.

The remote debugger is not supported in any edition of Visual Web Developer. For the visual studio, it is only supported in Pro and Team editions. This means you cannot run this on Express or Standard editions of Visual Studio 2008.

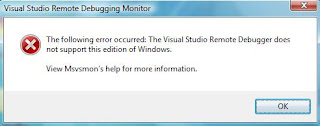

If your operating system is not configured properly, then the following error message appears:

There are a few questions to answer. The answers will prove to be the configuration setting of the remote debugger feature. The questions are as follows:

1. Should it be running as a service or an application?

2. Will the users on the local network would be debugging application or from any public network?

3. What changes in the network security would be required to allow this?

Tuesday, August 12, 2008

Midori ( A non-windows operating system by Microsoft)

With the advent of Web 2.0 and Cloud computing, this is all about sharing. This could be sharing of pictures, movies, games. But this could also be sharing of resources to make systems having capabilities which no one has ever thought of.

Midori is designed to be the future of Cloud computing. This would be a whole new operating system with architecture different from Windows. The architecture is named as Asynchronous Promise Architecture. The local and distributed resource would not be different for applications to consume. It is a net centric, component based operating system which is designed for connected systems. This would be completely developed in managed code. This would not depend upon the application installed on local systems. The hardware, on which the OS is run, would also not be a dependency. It is said that the core principal of Midori's design would be concurrency. This

would be an asynchronous only architecture designed for task concurrency

and parallel use of resources (both local and distributed). This would also

support different topologies, like Client-Server, Peer-to-Peer, multi-tier,

forming a heterogeneous mesh.

It appears that the operating system would be based on Service Oriented architecture with different components scattered in the cloud providing different services to the operating systems. Some say that the objects would be immutable like String in .net.

The kernel would be divided into two parts. One is Micro Kernel which is non-managed. This layer would be encapsulated in a managed Kernel services layer which would provide other functionalities. The scheduling framework is called Resource Management Infrastructure (RMI). This is a power based scheduler to support mobile devices.

The one thing that Microsoft will have to focus on is the co-existence of this new system with Microsoft Windows and applications designed to run on it. May be a runtime is provided just to support the applications designed to run on Microsoft Windows. When I am saying this, I mean COM based applications because managed applications would have no problem in running on a new CLR designed for Midori like we have MONO for Linux. So it can be seen that the applications software running on this, would be coded in .net languages.

Let's wait for the interesting things Microsoft releases about this new Operating System.

ClickOnce - Restore Application to previous state

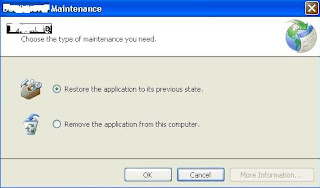

When an application is installed through ClickOnce then user has full control over reverting back to earlier version if he / she thinks that the current version should have not been installed. To do this user just has to go to Control Panel. Select the software and click Uninstall. As shown in the picture below, the user is asked about his selection whether he wants to completely uninstall the application or he wants to restore to the previous version.

Friday, August 8, 2008

Trace .net

Dim traceStream As System.IO.FileStream

traceStream = New System.IO.FileStream("c:\testingissue\test.log", FileMode.Create, FileAccess.Write)

TraceTextListener = New System.Diagnostics.TextWriterTraceListener(traceStream)

System.Diagnostics.Trace.Listeners.Clear()

System.Diagnostics.Trace.Listeners.Add(TraceTextListener)

System.Diagnostics.Trace.Write("Test Trace")

System.Diagnostics.Trace.Flush()

Friday, August 1, 2008

Joining with a result set returned from a UDF (CROSS AND OUTER APPLY)

set with a table in a SELECT query. How can I do that?

This question led me to a feature of T-SQL which is APPLY. This comes in

two flavors. They are as follows:

1. Cross Apply

2. Outer Apply

Both are used to join with the result set returned by a function. Cross

apply works like an INNER JOIN and OUTER apply works like LEFT OUTER JOIN.

Thursday, July 31, 2008

SPARSE COLUMN and COLUMN SET

Tuesday, July 29, 2008

Intellisence support in SQL Server 2008

I just executed the statement SELECT * FROM SYS.DATABASES. But this came as a price of execution of one more statement to the server. This is not unique to the SQL SERVER 2008. All those software which support intellisence services has to query the database to get the information about the object.

So if you have many database developers this might come as a cost to the Server instance. But this would also help you reduce the cost as the database developers become more productive with the support of intellisence.

I support the inclusion of this feature and admire it.

Wednesday, July 23, 2008

Batch Update ADO .net OPENXML

I wanted to use the Batch Update in one of my project and I explored the following information. This might be of interest to some of you.

I thought that using the Batch update option in .net 2.0 command, I can go with this. This is achieved using the rows in a dataset which are updated using a DataAdapter object. In reality one has to set the UpdateBatch size property to a specific value. But this is not the same. When i started the profiler, the trace showed that each statements are executed individually instead a single Update / Insert statement. When i studied the documentation, it became clear that Microsoft never claimed that a single statement would be executed. It says that a batch of statements are passed to SQL Server instead of individual statement. You can study the following article for further details:

http://msdn.microsoft.com/en-us/library/kbbwt18a(VS.80).aspx

But those who are like me and want that a single Update/ Insert statement is executed instead, have an alternate option. This is through the use of OPENXML feature of SQL Server. All the rows are provided to a stored procedure as a single parameter of XML. The parameter datatype is generally set as NText. For further details, please read the following:

http://en.csharp-online.net/XML_and_ADO.NET%E2%80%94OPENXML

After using this option, when you start your profiler trace, you should be satisfied that there is only a single Update statement being executed.

Are you happy now??

Functional Programming DataTypes F# .net

For compliance with .net, F# supports .net datatypes. But it considers them as mutable datatypes. It also introduces new datatypes, which are immutable ones. So with this we know that there are two categories of datatypes in F#, one is mutable and the other is immutable datatypes.

The immutable datatypes introduced by the language include tuples, records, discriminated unions and lists. A tuple is a collection which can hold more than one values. The number of values should be known at design time. Record is a specialized tuple in which each value may be named e.g. Age = 12. The list is a regular linked list. The discriminated union is like c style unions but it is typesafe. Like C, it defines a type whose instance may hold a value of any of the specified datatypes.

Though F# is a strongly typed language which used type inference. It deduces these datatypes during compilation.

Monday, July 21, 2008

Replication in SQL Server 2005

The benefits are increased availibility, load balancing and supporting remote clients.

The tools provided in SQL Server 2005 to support replication are as follows:

1- SQL Server Management Studio (SSMS)

2- SQL Server Replication Monitor (SSRM)

3- T-SQL replication stored procedures and RMO(s) Replication Management Objects.

There are three types of replication in SQL Server 2005.

1- Snapshot replication

2- Transactional replication

3- Merge Replication

There are different terminlogies which are necessary to discuss:

1- Article: This is the most basic unit of replication. It can be a table, view, etc.

2- Publication: This is a group of articles from the same database.

There are three roles which a server can play for replication. They are:

1- Publisher

2- Distributor

3- Subscriber

With SQL Server 2005, Microsoft did many changes to its replication technology.

like MOdifications in Replication Security agent. So if you have your replication scripts in SQL Server 2000 and you want to install SQL Server 2005 in your environment, update security settings.

Wednesday, July 9, 2008

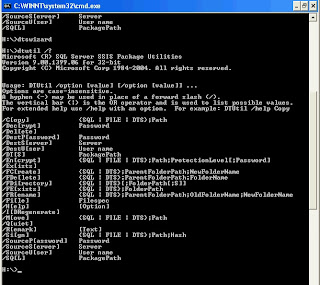

SSIS Utilities (DTSWizard, DTUtil)

2005. They are both SSIS based.

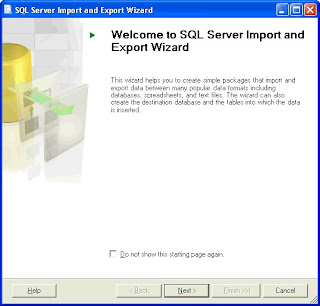

1. DTSWizard:

This is a wizard based import export utility. This creates a SSIS package,

executes it, copy it to file system or to database instance. This SSIS

package is for import / export data from many sources to SQL Server. I used

it to create a package to export data to excel file, which is successfully

did. I loved this utility.

You can start this utility by just typing DTSWIZARD in the command prompt.

2. DTUTIL:

This is also related to SSIS. This is about copying / moving SSIS packages

from / to SQL Server.

You can start this utility by typing DTUTIL from SQL Server. This is not

wizard based but it uses or creates SSIS package.

Sunday, June 15, 2008

Some info about Project Velocity CTP-1

Regions are local to any cache host.It may limiti the scalibility to the cache cluster.

There are three ports.

1- Cluster Port

2- Aribitration port

3- Cache port

Your application must implement the Cache Aside pattern. i.e. it may support its operation even if data is not available in the cluster or even if any server is not available.It should be able to access data directly from database.

There are two types of clients.

1- Simple:

2- Routing

A simple client has no routing capabilities. It also can not track where each cached object is stored.It can request data from only one cache host. If that host does not hold the data. It gets data from other cache host (if available) and presents it to the client.

Each client also has the option to store cached data locally.This feature is called Local Cache.The type of client is configured in the configuration file.

The configuration in the application, so that clients may access the cluster, is maintained in Application Configuration file (app.config)

The configuration of cluster is maintained in ClusterConfig.xml file. This file presents a single point of failure in Velocity which is not yet taken care of (Till CTP1)

Objects are serialized before they are stored on cache host. Before it is used by the client, it must be deserialized. So it is recommended to have a local cache.

Concurrency Model:

Velocity supports the following concurrency models.

1- Optimistic

2- Pessimistic

Cache Expiration and Eviction:

The expiration is configured on named cache level in ClusterConfig.xml file. A TTL (Time to Live) may be associated with an object at the time of "Put".

Eviction is done by using LRU (Least Recently Used) algorithm.

Tuesday, June 10, 2008

Sample code for FTP client using System.net (FTPWebRequest)

Imports System.Text

Imports System.Net

Imports System.IO

Public Class FTPClient

Private UserID As String

Private Password As String

Public Sub New(ByVal UserID As String, ByVal Password As String)

Me.UserID = UserID

Me.Password = Password

End Sub

Public Sub uploadFile(ByVal URI As String, ByVal UploadFileName As

String, ByVal LocalPath As String, ByVal LocalFileName As String, Optional

ByVal FTPUserID As String = "", Optional ByVal FTPPassword As String = "")

Dim completePath = LocalPath + "/" + LocalFileName

Dim fileInf As FileInfo = New FileInfo(completePath)

If UploadFileName = "" Then

UploadFileName = LocalFileName

End If

If FTPUserID = "" Then

FTPUserID = Me.UserID

End If

If FTPPassword = "" Then

FTPPassword = Me.Password

End If

Dim MyURI As String = URI + "/" + UploadFileName

Dim reqFTP As FtpWebRequest

Dim buffLength As Integer = 2048

Dim buff(buffLength) As Byte

Dim contentLen As Integer

Dim response As FtpWebResponse = Nothing

Dim fs As FileStream = fileInf.OpenRead()

Dim strm As Stream = Nothing

Try

reqFTP = CType(FtpWebRequest.Create(New Uri(MyURI)),

FtpWebRequest)

reqFTP.Credentials = New NetworkCredential(FTPUserID,

FTPPassword)

reqFTP.KeepAlive = False

reqFTP.Method = WebRequestMethods.Ftp.UploadFile

reqFTP.UseBinary = True

reqFTP.ContentLength = fileInf.Length

reqFTP.UsePassive = True

response = CType(reqFTP.GetResponse(), FtpWebResponse)

strm = reqFTP.GetRequestStream()

contentLen = fs.Read(buff, 0, buffLength)

While (contentLen <> 0)

strm.Write(buff, 0, contentLen)

contentLen = fs.Read(buff, 0, buffLength)

End While

Finally

strm.Close()

fs.Close()

response.Close()

End Try

End Sub

Public Function GetFileList(ByVal URI As String, Optional ByVal

FTPUserID As String = "", Optional ByVal FTPPassword As String = "") As

String()

Dim downloadFiles() As String

Dim result As StringBuilder = New StringBuilder()

Dim reqFTP As FtpWebRequest = Nothing

Dim response As WebResponse = Nothing

Dim reader As StreamReader = Nothing

If FTPUserID = "" Then

FTPUserID = Me.UserID

End If

If FTPPassword = "" Then

FTPPassword = Me.Password

End If

Try

reqFTP = CType(FtpWebRequest.Create(URI), FtpWebRequest)

reqFTP.UseBinary = True

reqFTP.Credentials = New NetworkCredential(FTPUserID,

FTPPassword)

reqFTP.Method = WebRequestMethods.Ftp.ListDirectory

response = reqFTP.GetResponse()

reader = New StreamReader(response.GetResponseStream())

Dim line As String = reader.ReadLine()

While Not line Is Nothing

result.Append(line)

result.Append("\n")

line = reader.ReadLine()

End While

result.Remove(result.ToString().LastIndexOf("\n"), 1)

downloadFiles = result.ToString().Split("\n")

Catch ex As Exception

downloadFiles = Nothing

Finally

reader.Close()

response.Close()

End Try

Return downloadFiles

End Function

Public Sub downloadFile(ByVal Uri As String, ByVal ToDownLoadFileName

As String, ByVal LocalPath As String, Optional ByVal LocalFileName As

String = "", Optional ByVal FTPUserID As String = "", Optional ByVal

FTPPassword As String = "")

Dim result As StringBuilder = New StringBuilder()

Dim reqFTP As FtpWebRequest = Nothing

Dim response As FtpWebResponse = Nothing

Dim reader As StreamReader = Nothing

Dim ftpStream As Stream = Nothing

Dim outputStream As FileStream = Nothing

If FTPUserID = "" Then

FTPUserID = Me.UserID

End If

If FTPPassword = "" Then

FTPPassword = Me.Password

End If

If LocalFileName = "" Then

LocalFileName = ToDownLoadFileName

End If

Try

outputStream = New FileStream(LocalPath + "\\" + LocalFileName,

FileMode.Create)

reqFTP = CType(FtpWebRequest.Create(Uri + "/" +

ToDownLoadFileName), FtpWebRequest)

reqFTP.UseBinary = True

reqFTP.Credentials = New NetworkCredential(FTPUserID,

FTPPassword)

reqFTP.Method = WebRequestMethods.Ftp.DownloadFile

response = CType(reqFTP.GetResponse(), FtpWebResponse)

ftpStream = response.GetResponseStream()

Dim cl As Long = response.ContentLength

Dim bufferSize As Integer = 2048

Dim readCount As Integer

Dim buffer(bufferSize) As Byte

readCount = ftpStream.Read(buffer, 0, bufferSize)

While readCount > 0

outputStream.Write(buffer, 0, readCount)

readCount = ftpStream.Read(buffer, 0, bufferSize)

End While

Finally

ftpStream.Close()

outputStream.Close()

response.Close()

End Try

End Sub

End Class

Monday, May 26, 2008

SharpZip Library .net

http://codecruncher.blogsome.com/2007/01/04/37/

Still SharpZip is not available for .net 3.5. So guyz wait for this.

Sample Code Word 11.0 Object Library

Microsoft.Office.Interop.Word.Document doc = new Microsoft.Office.Interop.Word.Document();

ApplicationClass myWordApp = new ApplicationClass(); // our application

object nothing = System.Reflection.Missing.Value; // our 'void' value

object filename = "C:/TestDoc/TestDot.dot"; // our word template

object destination = "C:/TestDoc/MyNewDocument.doc"; // our target filename

object notTrue = false; // our boolean false

myWordApp.Visible = false;

doc = this.myWordApp.Documents.Add(ref filename, ref nothing, ref nothing, ref nothing);

doc.WebPagePreview();

doc.SaveAs(

ref destination,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing,

ref nothing);

Office Programming

I recommend reading about these.

Visual Studio Tools for Office.

Microsoft Word Object Library

Microsoft Excel Object Library

Just search these on the google and read the wonderful information on the internet about these.

BCC in VSTO for outlook

Outlook.Recipient rcp = ((Outlook.MailItem)Item).Recipients.Add("shujaatned@gmail.com");

rcp.Type = 3;

((Outlook.MailItem)Item).Recipients.ResolveAll();

Here Outlook.Recipient.Type = 3 means that this a BCC recipient.

This took me all day to find out. But I dont know why MailItem.BCC does not work.

Friday, May 2, 2008

DDL trigger for auditing DDL statments running on SQL Server

For keeping a record, it is better to create a table.

CREATE TABLE [dbo].[DDL_OBJECT_VERSION](

[EVENT_TYPE] [varchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS

NULL,

[POST_TIME] [datetime] NULL,

[LOGIN_NAME] [varchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS

NULL,

[USERNAME] [varchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,

[DATABASE_NAME] [varchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS

NULL,

[SCHEMA_NAME] [varchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS

NULL,

[OBJECTNAME] [nchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,

[OBJECT_TYPE] [varchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS

NULL,

[SQL_TEXT] [varchar](max) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,

[SERVER_NAME] [varchar](100) COLLATE SQL_Latin1_General_CP1_CI_AS

NULL

) ON [PRIMARY]

The context information can be obtained by EVENTDATA() function availble in DDL trigger. The format of XML returned by this function is as follows:

ANSI_PADDING="ON"

QUOTED_IDENTIFIER="ON"

ENCRYPTED="FALSE" />

[AddressID] [int]

IDENTITY (1, 1)

NOT FOR REPLICATION NOT NULL,

[AddressLine1] [nvarchar](60) NOT NULL,

[AddressLine2] [nvarchar](60) NULL,

[City] [nvarchar](30) NOT NULL,

[StateProvinceID] [int] NOT NULL,

[PostalCode] [nvarchar](15) NOT NULL,

[rowguid] uniqueidentifier ROWGUIDCOL NOT NULL CONSTRAINT

[DF_Address_rowguid] DEFAULT (NEWID()),

[ModifiedDate] [datetime] NOT NULL CONSTRAINT

[DF_Address_ModifiedDate] DEFAULT (GETDATE())

) ON [PRIMARY];

You can access invidual information with XQuery support available in SQL Server. The example code of DDL trigger is as follows:

CREATE TRIGGER [DDL_TRIG_OBJ_VERSIONS]

ON DATABASE

FOR DDL_DATABASE_LEVEL_EVENTS

AS

SET NOCOUNT ON

DECLARE @data XML;

SET @data = EVENTDATA();

insert INTO [DBO].[DDL_OBJECT_VERSION](

EVENT_TYPE,

POST_TIME,

SERVER_NAME,

LOGIN_NAME,

USERNAME,

DATABASE_NAME,

SCHEMA_NAME,

OBJECTNAME,

OBJECT_TYPE,

SQL_TEXT

)

VALUES(

@data.value('(/EVENT_INSTANCE/EventType)[1]','sysname'),

GETDATE(),

@data.value('(/EVENT_INSTANCE/ServerName)[1]','sysname'),

@data.value('(/EVENT_INSTANCE/LoginName)[1]','sysname'),

@data.value('(/EVENT_INSTANCE/UserName)[1]','sysname'),

@data.value('(/EVENT_INSTANCE/DatabaseName)[1]','sysname'),

@data.value('(/EVENT_INSTANCE/SchemaName)[1]','sysname'),

@data.value('(/EVENT_INSTANCE/ObjectName)[1]','sysname'),

@data.value('(/EVENT_INSTANCE/ObjectType)[1]','sysname'),

@data.value('(/EVENT_INSTANCE/TSQLCommand)[1]','VARCHAR(max)')

);

Wednesday, April 30, 2008

Operation causing trigger to fire

But there are workarounds through which you can achieve the same result. For the work around you must remember the INSERTED and DELETED tables available in a DML trigger.

What you can do is to find out the count on these two tables .

Select @InsertedCount = count(*) from INSERTED;

Select @DeletedCount = count(*) from DELETED;

where the variables are declared as:

Declare @InsertedCount, @DeletedCount int;

Now if @InsertedCount > 0 and @DeletedCount = 0, it means that it is an insert operation.

if @InsertedCount = 0 and @DeletedCount > 0, it means that it is a delete operation.

If @InsertedCount > 0 and @DeletedCount > 0, it means that it is an update operation.

The last condition is if @InsertedCount = 0 and @DeletedCount = 0. This is not a hypothetical situation. But a real situtation. This situation can occur as a result of Update or Delete query which has selected no record to modify / delete because of the selection criteria in WHERE clause.

INSERTED / DELETED tables in a SQL Server trigger

The above theory about number of records is true when the insert / update/ delete operation result in some rows being inserted/ updated/ deleted. So if no record is selected because of the criteria of delete or update statement. Then even in case of delete or update both tables i.e. INSERTED and DELETED would be empty. But if you would have to log the activity then you would not want to log the attempt which has modified no record.

I used this approach to find out the operation which has caused the trigger to fire. The trigger was set to fire for insert, update or delete operation. I had to log each modification of data. The above approach of record cound in INSERTED and DELETED table worked perfectly fine for me.

I will be coming with more blogs about SQL server trigger in coming days.

1-